China just dropped a bombshell in the AI arms race, and Silicon Valley is losing it.

What’s popping: DeepSeek, a virtually unknown Chinese AI lab, built an open-source language model that outperforms heavyweights like OpenAI’s GPT-4o. Almost overnight, DeepSeek has become a top-10 model across global rankings.

Here’s the kicker: DeepSeek took only two months and less than $6 million to get to this level, and it did so using downgraded Nvidia chips that the U.S. thought would hobble China’s AI ambitions.

Compare that to the $100 million-plus it cost to train something like GPT-4. And all this while U.S. tech companies are spending hundreds of billions to scale AI infrastructure.

Silicon Valley’s panic is palpable. It raises two questions:

- If a relatively small lab can do this, what’s stopping China’s AI ecosystem from leapfrogging the U.S.?

- Does it even make sense to invest so much in AI infrastructure?

How did they pull this off: DeepSeek leaned on an approach called "model distillation": training a smaller, cheaper model using a large, expensive one. Think of it as a talented apprentice learning the craft by shadowing a master artisan. Efficient, precise, and, as it turns out, game-changing.
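For the curious, here is a rough idea of what distillation looks like in code. This is a minimal sketch of the generic soft-label recipe, not DeepSeek's actual training setup, which isn't described here. The function names, the example scores, and the temperature value are all made up for illustration. The idea: the big "teacher" model's output scores are softened into probabilities, and the small "student" model is penalized for how far its own probabilities drift from them.

```python
import math

def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; a higher temperature softens them."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence from the teacher's softened distribution to the student's.

    Hypothetical helper for illustration: the loss is zero when the student
    matches the teacher exactly and grows as the two distributions diverge.
    """
    p = softmax(teacher_logits, temperature)  # the master's opinion
    q = softmax(student_logits, temperature)  # the apprentice's attempt
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Made-up scores over three candidate next words.
teacher = [4.0, 1.0, 0.5]
close_student = [3.5, 1.2, 0.4]  # roughly agrees with the teacher
far_student = [0.5, 1.0, 4.0]    # prefers a different word entirely

print(distillation_loss(teacher, close_student))
print(distillation_loss(teacher, far_student))
```

In real training, this loss would be minimized over many examples so the student's outputs gradually line up with the teacher's. The student that roughly agrees with the teacher gets the smaller loss of the two.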

The bigger picture is unsettling for Washington. The export controls meant to choke China’s AI progress by limiting access to Nvidia chips haven’t exactly worked.

The other side: some of Silicon Valley’s who’s who simply say that China is lying and has in fact gotten its hands on thousands of Nvidia GPUs, but won’t admit it for fear of Washington’s scorn.

Meanwhile, analysts say this could be the black swan event that unravels the Nvidia trade.

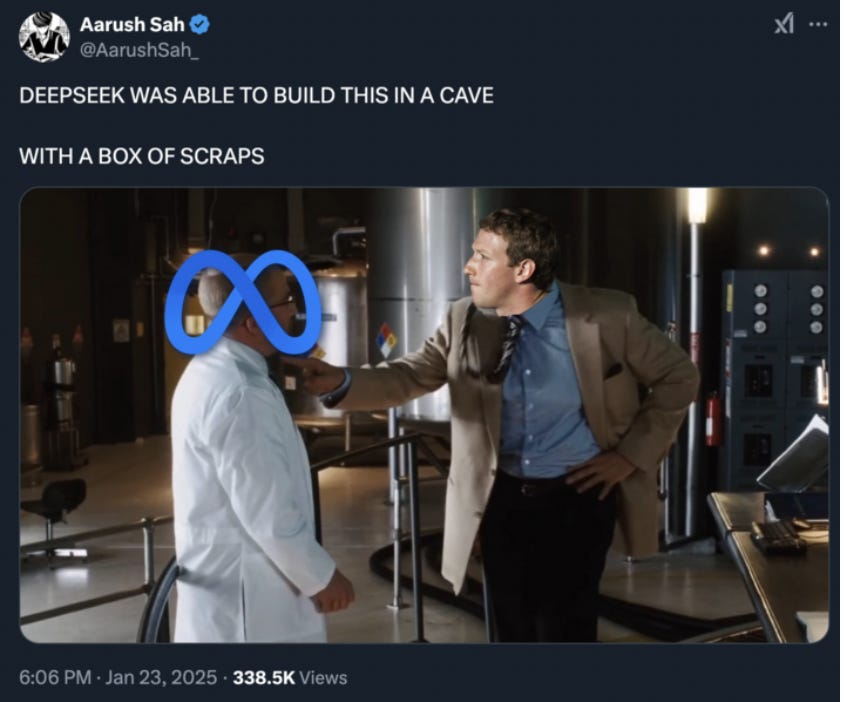

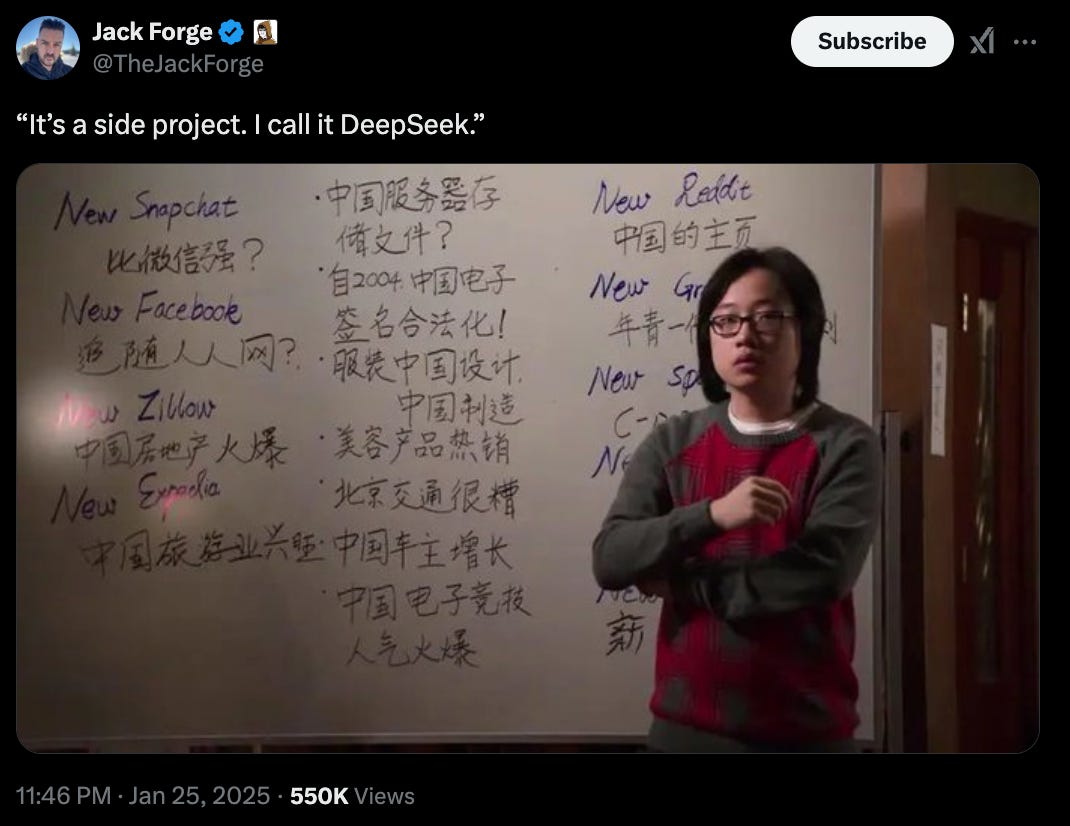

Also, Twitter had a field day with the memes. Here are some of our favorites.